What easier way to make a film about sockpuppets than using motion capture technology?

Note: This was built for Blender 2.92 (with the matching NodeOSC and Animation Nodes releases) and Brekel 1.46.

Newer versions are known to have issues or not work at all.

The primary goal of the 3D character animation was to replicate the subtle imperfections of real-life puppetry while still allowing fine tweaks to the final animation to get the exact look needed. Since the film had a limited budget and all of its CG was done in Blender, we needed a solution that handled hand motion well without requiring full-on motion capture gear.

This is where Leap Motion came into play. It offered a cheap, good-quality way to capture hand motion. The problem was that, at least at the time, there was no way to link that data to Blender for both live preview and capture.

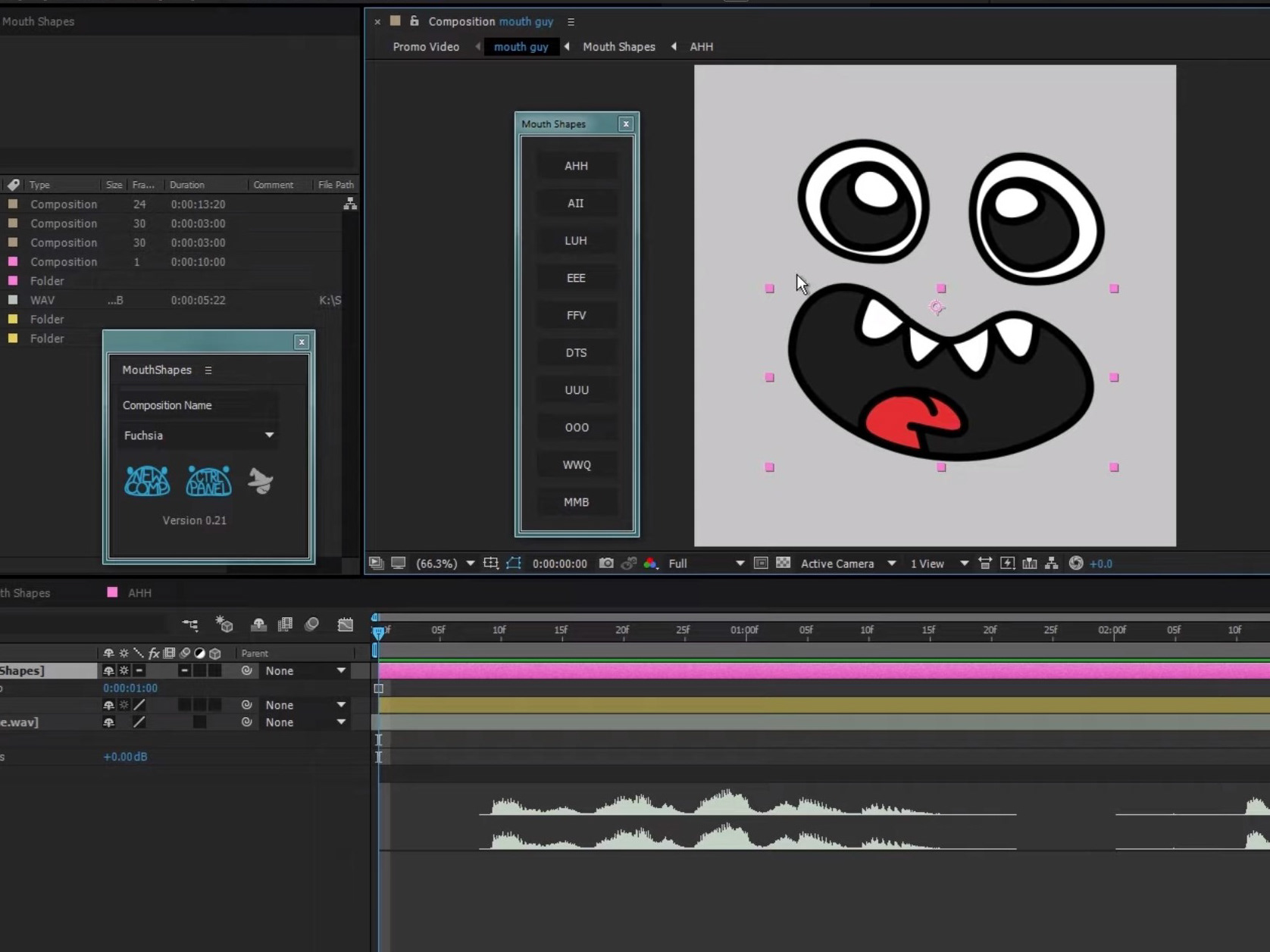

To solve this, I used a handy program called Brekel Hands, which can stream OSC data over your local network. All that was needed was a way for Blender to receive that data and relay it onto a few empties (nulls) in a scene.

"Network" settings in Brekel Hands

Luckily for me, there was already a plugin called NodeOSC that lets you capture the data and apply it to any property you want in Blender. All I needed to do was create a null for each joint and link both its position and rotation to the global coordinates from Brekel. Once that was done, there were two more problems. The rotation data was wildly off-scale compared to what it was supposed to look like, and there was no way to capture keyframes for every joint live, all at once.

Rotation is way off from what Blender expects.

These two issues were solved with Animation Nodes. By duplicating the collection of empties that holds each joint ("animated buffer" in my case), then looping collection A's raw data into collection B with a few offsets and some scaling applied, I got something halfway decent.

Corrected empty collection
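The offset-and-scale correction between the two collections boils down to a per-axis remap. Below is a rough sketch of that idea in plain Python, not the actual Animation Nodes tree. The joint names and the scale and offset values are placeholders; the degrees-to-radians factor is only an example of the kind of scaling that might be needed, since the real values depend on what Brekel sends.

```python
import math


def remap(raw, scale, offset):
    """Apply a per-axis scale and then an offset to one (x, y, z) triple."""
    return tuple(r * s + o for r, s, o in zip(raw, scale, offset))


def correct_joints(raw_rotations, scale, offset):
    """Map every joint in collection A (raw) to its corrected value for collection B."""
    return {name: remap(rot, scale, offset) for name, rot in raw_rotations.items()}


if __name__ == "__main__":
    # Hypothetical raw rotations as they might arrive for two joints.
    collection_a = {
        "palm": (90.0, 0.0, -180.0),
        "index_01": (45.0, 10.0, 0.0),
    }
    # Assumed correction: treat incoming values as degrees, no offset.
    deg_to_rad = (math.radians(1.0),) * 3
    no_offset = (0.0, 0.0, 0.0)

    collection_b = correct_joints(collection_a, deg_to_rad, no_offset)
    for name, rotation in collection_b.items():
        print(name, rotation)
```

Keeping the raw empties untouched and writing corrected values to a second set means you can keep adjusting the scale and offsets while the live data is still streaming in.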

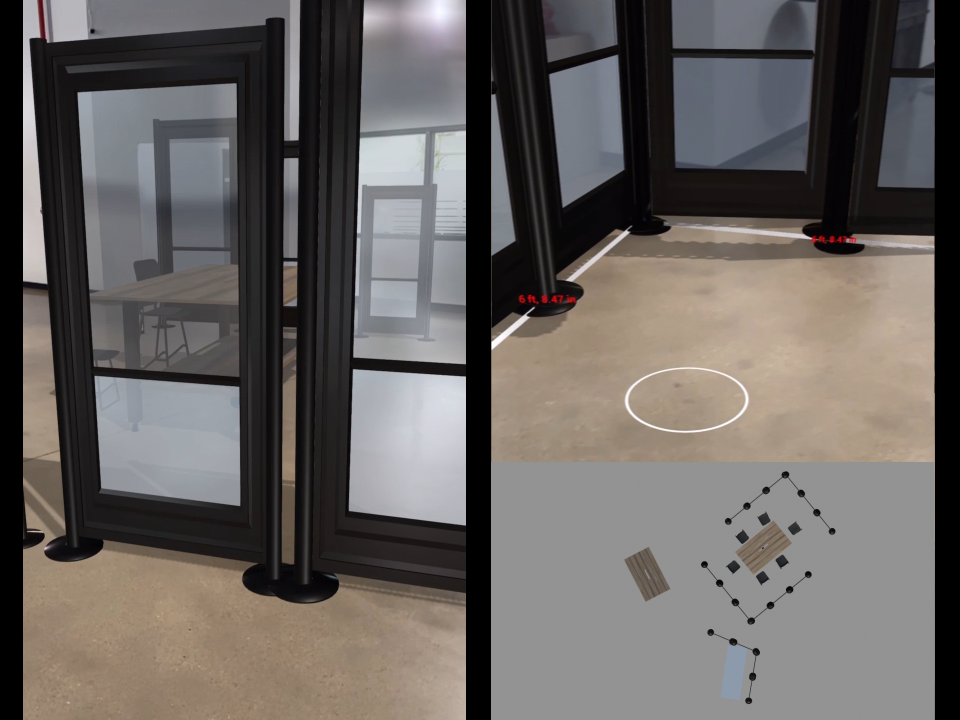

Visualization of points using animation constraints

From there, I needed a way to capture the movements. Animation Nodes offers a handy "bake to keyframes" button. Because this all happens live, you need to extend your timeline far enough to cover all the capture time you need.
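How far to extend the timeline is simple arithmetic: capture length in seconds times the scene frame rate. A tiny helper, with made-up numbers, might look like this:

```python
import math


def end_frame_for_capture(capture_seconds, fps, start_frame=1):
    """Return the last frame needed to hold a live capture of the given length."""
    return start_frame + math.ceil(capture_seconds * fps) - 1


if __name__ == "__main__":
    # Example values only: a five-minute take at 24 fps.
    print(end_frame_for_capture(300, 24))
    # Inside Blender, the result would be assigned to bpy.context.scene.frame_end.
```

It is worth adding some slack on top of that so a good take does not get cut off by the end of the timeline.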

After that, all that was left was to rig some characters and drive the respective joints with the nulls I wanted. Once I had something I liked, I baked all the armature constraints to keyframes using "visual keys". Then we could clean up the mocap however we saw fit.
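The same bake can also be scripted. The sketch below assumes the armature is in Pose Mode with the relevant bones selected and uses Blender's Bake Action operator (`bpy.ops.nla.bake`) with visual keying turned on. The frame range and the choice to leave constraints in place are assumptions for illustration, not necessarily the settings used on the film.

```python
def visual_bake_settings(frame_start, frame_end):
    """Collect the Bake Action options for a visual-keying bake of pose bones."""
    return {
        "frame_start": frame_start,
        "frame_end": frame_end,
        "only_selected": True,       # bake only the selected bones
        "visual_keying": True,       # key the final, constraint-driven pose
        "clear_constraints": False,  # assumption: keep constraints for re-baking
        "use_current_action": False,
        "bake_types": {"POSE"},
    }


def bake_selected_bones(frame_start, frame_end):
    """Run the bake. Only works inside Blender, where the bpy module exists."""
    import bpy

    bpy.ops.nla.bake(**visual_bake_settings(frame_start, frame_end))


if __name__ == "__main__":
    # Example range only; printed here so the settings can be checked outside Blender.
    print(visual_bake_settings(1, 7200))
```

Once the bake is done, the constraints can be muted or removed and the resulting keyframes edited like any hand-keyed animation.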

And voilà... mocap puppets!